A Coming Shift in the Fundamentals of User Experience

Ever notice that there are a surprising number of popular books about paradigm shifts? There’s “The Structure of Scientific Revolutions”, “The Innovator’s Dilemma”, and “Only the Paranoid Survive”, just to name a few. These books all discuss the situation in which for some scientific discipline or for some industry, the standard way of doing things is upended by a new, disruptive technology or idea, and everyone involved has to adapt.

Presently, it’s rather obvious that we’re right in the thick of the next big shift, and that this shift has something to do with computers getting good at dealing with ambiguity. Up till recently, they were only good at following very precise logical rules, but now, they’re getting good at assisting people with a wide range of rather ambiguous tasks. On top of that, between the AI researchers, the billions in investment, the new computer chip hardware for training models, and the new supercomputers built from those chips, there’s a lot going on to suggest that computers are about to get much better at those kinds of tasks very quickly.

This post presents a few predictions about how the shift in generative AI is going to play out in the realm of User Experience (or “UX” for short). I’ve spent nearly a decade and a half working on a wide variety of UX-heavy projects, writing computer code, building graphical software interfaces, setting up physical hardware, and setting/reviewing metrics with a team. I’ve also spent a good bit of the past year closely following the revolution in Generative Artificial Intelligence, and it looks like there are some clear implications for the future of UX.

The Cross-Disciplinary Nature of UX

UX is a kind of holistic, interdisciplinary term, meant to encompass everything to do with a person’s experience interacting with a product. The broadness of the term is the important part: if you send a graphic designer to solve a UX problem, and the graphic designer is only thinking about ways in which to rearrange the pixels on a screen, they might overlook a solution that involves redesigning the hardware, or changing the marketing/branding behind the product. The best UX choices often involve making tradeoffs between the design of the hardware, the software, the marketing, and even the packaging that go into the ultimate end-user experience of using the product. The reason UX is in for a big shift is that there’s suddenly a new discipline to consider, and that’s the discipline of training and deploying AI agents.

Five Predictions for the Future of UX

1. Complex GUI Controls will be Replaced by Prompts to an AI agent

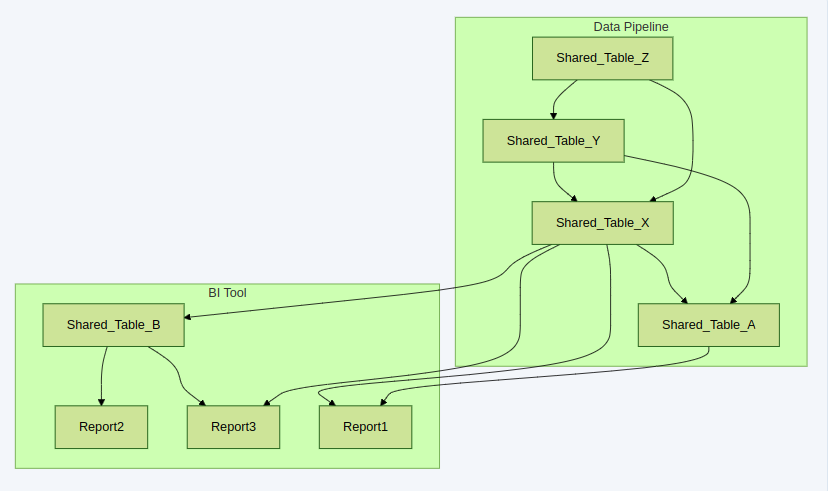

An AI assistant is already perfectly capable of operating your Graphical User Interface (GUI) for you in response to your prompts. For a simple task, it’s probably easier for you to use the GUI yourself, but for certain complex or elaborate tasks, an AI agent can finish an entire workflow for you in less time and with less effort than it would take you in the GUI. The folks at Anthropic have an AI “Computer Use” demo of how to allow an AI agent to operate GUIs that were originally designed for people. In my work for Quodor Data, I built a custom GUI, designed for editing Business Intelligence artifacts, and specifically configured to be easy for an AI agent to operate. The agents can execute multi-step workflows for you, write code and documentation for you, move snippets of code from one place in a pipeline to the next, track down and navigate you to a part of the UI, populate search bars, and all with a very impressive degree of accuracy. From everything I’ve seen, such agents already work quite well, especially when they’re using a GUI specially designed to be automated!

Once we expect that the end-user will perform certain complex tasks using prompts, a lot of the existing GUI controls for those tasks become unnecessary. Think about which menus, wizards, modals, drag-and-drop interactions, embedded DSLs, right-click menus, etc. could be replaced by a simple prompt to an AI agent. Super simple software (e.g. the calculator app on your phone) might not change much, but GIS tools, 3D modeling and CAD software, business intelligence software, and sound editing and video editing software are all great candidates for this kind of shift.

2. AI Safety will be Essential to Every UX Design

What’s the purpose of safety features? To let us do more dangerous things!

Notice how in the Anthropic “Computer Use” demo mentioned above, the AI agent is deliberately confined to its own Docker container? If we’re going to give the AI agent free rein to point and click our mouse all over our computer, we’re going to need guardrails in place to keep it from doing something terrible. Even when a company is extremely certain that their AI agent is safe, the end-user might not be so willing to give the agent a chance if there’s a possibility it’ll do something very bad. The UX surrounding these agents will need to find ways to assure the end-user that he’s safe. In Quodor Data’s interface, for example, the AI assistant is only ever able to edit its own private copy of your business intelligence artifacts, making changes that are guaranteed to be visible to you alone until you’ve had a chance to review them and give them your stamp of approval. Whatever the interface, if it has any AI capabilities, the end-user is going to want to push the AI assistant to its limits, but wouldn’t dare do so without a clear safety mechanism to ensure that things won’t end badly.
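To make the “private copy” idea a bit more concrete, here’s a minimal sketch of how such a guardrail might be structured. This is not Quodor Data’s actual implementation; the `StagedWorkspace` class and all of its method names are hypothetical, and it assumes artifacts can be represented as simple named strings.

```python
from __future__ import annotations

from copy import deepcopy


class StagedWorkspace:
    """Hypothetical sketch: agent edits stay in a private draft until approved."""

    def __init__(self, artifacts: dict[str, str]):
        self._published = dict(artifacts)         # what everyone else sees
        self._draft = deepcopy(self._published)   # the agent's private copy

    def agent_edit(self, name: str, content: str) -> None:
        # The agent can only ever write to the draft, never to published state.
        self._draft[name] = content

    def pending_changes(self) -> dict[str, tuple[str | None, str]]:
        # Map each changed artifact to (published content, drafted content).
        # A brand-new artifact shows up with None as its published content.
        return {
            name: (self._published.get(name), content)
            for name, content in self._draft.items()
            if self._published.get(name) != content
        }

    def approve(self) -> None:
        # The user's stamp of approval: the draft becomes the published state.
        self._published = deepcopy(self._draft)

    def discard(self) -> None:
        # Throw away everything the agent did since the last approval.
        self._draft = deepcopy(self._published)

    def published(self) -> dict[str, str]:
        return dict(self._published)


workspace = StagedWorkspace({"revenue_query": "SELECT 1"})
workspace.agent_edit("revenue_query", "SELECT 2")
print(workspace.pending_changes())  # the user reviews this before approving
```

The point of the design is that `approve` and `discard` are the only paths between the draft and the published state, so the end-user can let the agent experiment freely. A real system would also need to handle deletions and concurrent edits, which this sketch ignores.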

Visual Patterns to Help Convey Safety

The safety aspects of the AI agents are going to be so important that any good UI/UX design will need to establish a clear visual language around them. In the same way little red error text indicates that something is going wrong, there will need to be a standard set of visual cues to indicate which things are generated by you vs. by your AI, which things are safe to share or not, and how to undo or dispose of unwanted changes made by the AI agent.

3. The end-user’s role in creative tasks will involve less generating content, and more reviewing, undoing, correcting and tweaking the content generated by an AI agent.

For many User Experiences, the key design consideration will no longer be to make it easy for the user to make changes. Instead, it will be to let the user easily evaluate whether or not the changes made by an AI agent are any good, and to perform undos and tweaks to correct the AI agent’s work. Just as in the prediction about Safety, it’s not enough for the AI agent to do the task correctly. It must also make it clear to the end-user that it was correct.

Redesigning interfaces this way is no small challenge. With a user making edits himself, he’s always aware of the changes, and they’re generally right there in front of him. If the AI agent, on the other hand, is making changes to parts of the interface that are out-of-sight, the user will still need to know what happened and have some way of evaluating whether it was good or bad.

4. UX people will constantly monitor for the “edge” of their AI agent’s capabilities and communicate it to end users

Whenever you test out an AI agent, you can find some tasks that it does well and some that it does poorly. A key concern for UX designers that use AI agents will be to determine where that edge lies, to keep track of it as it moves around, and to clearly set expectations with the end-user about what the AI agent can and can’t do. UX people will have to pay attention to AI quality tests, and will need to quickly reassess what an AI is capable of when someone makes an improvement to a part of the agent. They’ll also have to have some way to let users know when the AI agent can be trusted to do something new.
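One way to picture tracking that “edge” is to compare quality-test results before and after a change to the agent. The sketch below is purely illustrative: the `EvalResult` record, the task names, and the 90% pass-rate threshold are all assumptions, not something any particular evaluation tool provides.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    """Hypothetical summary of one quality-test suite for a kind of task."""
    task_type: str
    passed: int
    total: int


def trusted_tasks(results: list[EvalResult], threshold: float = 0.9) -> set[str]:
    # A task type is inside the edge when its pass rate meets the threshold.
    return {
        r.task_type
        for r in results
        if r.total > 0 and r.passed / r.total >= threshold
    }


def newly_trusted(
    before: list[EvalResult], after: list[EvalResult], threshold: float = 0.9
) -> set[str]:
    # Task types that crossed the edge after an improvement to the agent.
    return trusted_tasks(after, threshold) - trusted_tasks(before, threshold)


before = [EvalResult("write_sql", 95, 100), EvalResult("build_dashboard", 60, 100)]
after = [EvalResult("write_sql", 95, 100), EvalResult("build_dashboard", 92, 100)]
print(newly_trusted(before, after))  # candidates to announce to end-users
```

The same comparison run in the other direction (`trusted_tasks(before) - trusted_tasks(after)`) would flag tasks the agent has gotten worse at, which users arguably need to hear about even more urgently.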

5. The Best Agents Will Need all the Context they Can Get

The more relevant context an AI agent has, the better it’s going to be at any task. For an agent that’s part of a user experience, that agent should ideally know everything it can about the end-user. It should know what they’re looking at now, what they’ve done recently, what their coworkers are doing, and everything it’s safe to know about the underlying data they’re working with. As long as you’ve addressed any risk of the agent leaking sensitive information to where it’s not supposed to be (see the above prediction on Safety), then the more context it has access to, the better.

Getting this context to an AI agent in an efficient way is no small task. In fact, much of the software people use might need to be rewritten so that it can communicate that context in a more efficient manner. For example, you might not want to pay to send a video stream of your laptop to an AI agent, and you might not want to send it a copy of all the HTML and CSS in your web browser at all times, but if you’re using a web frontend that’s designed from the ground up to have a very succinct representation of what you’re looking at and doing, then you’ll be able to feed that data to an AI agent in a way that doesn’t break the bank.
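As a rough illustration of what a “succinct representation” could look like, here’s a small sketch that serializes the user’s current view into compact JSON for an agent’s prompt. The `ViewContext` fields and the `to_prompt_context` helper are hypothetical names chosen for this example; a real frontend would pick whatever state matters for its own domain.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ViewContext:
    """Hypothetical snapshot of what the user is looking at and doing."""
    screen: str
    selected_ids: list[str] = field(default_factory=list)
    recent_actions: list[str] = field(default_factory=list)


def to_prompt_context(ctx: ViewContext, max_actions: int = 5) -> str:
    payload = asdict(ctx)
    # Keep only the most recent actions so the payload stays small.
    payload["recent_actions"] = (
        ctx.recent_actions[-max_actions:] if max_actions > 0 else []
    )
    # Compact separators drop the whitespace JSON would otherwise include.
    return json.dumps(payload, separators=(",", ":"))


ctx = ViewContext(
    screen="dashboard_editor",
    selected_ids=["chart_7"],
    recent_actions=["opened_dashboard", "selected_chart", "renamed_axis"],
)
print(to_prompt_context(ctx, max_actions=2))
```

A payload like this is typically a few hundred bytes, compared to the many kilobytes of markup behind a typical web page, which is the whole cost argument in miniature.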

This point about context is a good reason to expect that end-users will prefer workflows in which they interact with very few distinct agents. If you’re starting a task based on an email you received, but the AI agent that helps with your emails is different from the one that helps with the task, you’ll probably wind up spending time copy-pasting relevant information between the two agents, and that’s probably not a good use of a person’s time.

For which kinds of Products will these UX changes be important?

While I think it’s pretty clear that the changes described above would be advantageous for any company to implement, there are some products for which they might make a vastly bigger difference. It’s always struck me how in certain circumstances, a UX improvement can lead to a huge increase in market share, even when the product does kind of the same thing as the competitors. Apple is probably the best-known example of this. Many tech people never understood the appeal of what looked like a more expensive computer that does basically all the same stuff as the cheaper ones, but with a mildly different interface. As it turns out, those seemingly small UX improvements were enough to convince a lot of people to pay a premium for Apple products. The lesson here is that it’s easy to underestimate the impact of what looks like a small UX advantage. For the UX advantages described above, here are the kinds of situations in which they’ll probably have a very large impact on company market share:

- Companies for which the complexity of using their software is a major drawback to the end-users. Good UX is valuable because it reduces complexity, time-spent and cognitive load. So for products in which those things are a problem, the impact of better UX will be much larger.

- Any software product that has a steep learning curve, for similar reasons as the first point above.

- Any situation where the end user spends a lot of their time interacting with the product, again for the same reasons as the first point above.

- Mature markets with lots of players selling products with very similar features. If users can do pretty much all the same things with one product as with another, the UX and the price will wind up being the main differentiating factors.

All those factors come together in the case of Business Intelligence products. It seems like a lot of people have picked up on that, and so that’s why we’re seeing so many young disruptive companies working on some kind of AI-enhanced BI tool.

Summary

Large disruptive technological shifts seem to come around about once in a generation. There’s one going on right now involving computers getting good with ambiguity. That shift comes with a number of implications for how User Experience design will have to change in the future. This post presented several specific predictions for the ways in which UX will have to change, and wrapped up with a discussion of which kinds of products and companies will probably be most impacted by these changes. I’m mainly focused on how they’ll change the User Experience for the upcoming wave of new Business Intelligence tools, but there are a lot of spaces in which something similar could take place.